Object storage governance

Dell Data Analytics Engine, powered by Starburst Enterprise platform (SEP), includes support for governance on object storage data sources. SEP provides an endpoint that lets users and clients connect to object storage catalogs, as an alternative to connecting directly to remote or on-premises object storage endpoints.

Governance on object stores includes the following main features:

- Built-in access control for incoming object storage requests, including table access control when the requested object storage path maps to a table known to SEP.
- Dynamic routing of object storage requests to different remote object storage endpoints, based on the request path or user credentials.
- Built-in access control access logging: access decisions for object storage requests are logged and persisted.

Configuration

Object store governance requires built-in access control and location access control to be enabled. Set the following properties to true in your catalog configuration file:

starburst.access-control.enabled=true

starburst.access-control.location.enabled=true
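As a pre-flight check, you can verify both flags programmatically. The following Python sketch assumes the properties are stored as plain `key=value` lines. It does not handle every Java properties feature, such as line continuations or `:` separators. The function names are hypothetical.

```python
"""Sketch: check that the object storage governance flags are enabled.

Assumption: the configuration uses simple "key=value" lines; comments start
with '#' or '!'. Line continuations and escapes are not handled.
"""
from __future__ import annotations

REQUIRED_TRUE = (
    "starburst.access-control.enabled",
    "starburst.access-control.location.enabled",
)


def parse_properties(text: str) -> dict[str, str]:
    """Parse simple key=value lines, ignoring blank lines and comments."""
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def missing_governance_flags(text: str) -> list[str]:
    """Return the required properties that are absent or not set to true."""
    props = parse_properties(text)
    return [key for key in REQUIRED_TRUE if props.get(key, "").lower() != "true"]


if __name__ == "__main__":
    sample = """
    starburst.access-control.enabled=true
    starburst.access-control.location.enabled=false
    """
    print(missing_governance_flags(sample))  # ['starburst.access-control.location.enabled']
```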

Catalog metadata synchronization

Catalog metadata synchronization enables synchronization of metadata from the catalog’s metastore to the SEP-embedded metastore.

Synchronizing metadata is required for object storage governance in Dell Data Processing Engine. SEP uses the synchronized metadata to associate object storage requests with the corresponding SQL tables. This lets it enforce built-in access control (BIAC) permissions when verifying access to object storage paths.

After the metastore sync, SEP checks table privileges instead of storage location privileges. For SELECT, INSERT, UPDATE, or DELETE operations on object storage tables, only table privileges are used; location privileges are not checked. If you do not have the right table privileges, you get an error when you attempt to access the data. Make sure to set the correct table privileges for Spark jobs that need to access tables after a metastore sync.
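A few lines of code can model how synchronization changes the access check. This Python sketch is a conceptual model, not SEP's implementation. It assumes a request path belongs to the synced table whose location is the longest matching prefix, and that grants are plain sets of operations. The class and function names and the `s3://lake/...` paths are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Grants:
    # (role, table name) -> operations granted on that table, e.g. {"SELECT"}
    table: dict[tuple[str, str], set[str]] = field(default_factory=dict)
    # (role, location prefix) -> operations granted on that storage location
    location: dict[tuple[str, str], set[str]] = field(default_factory=dict)


def find_table(path: str, table_locations: dict[str, str]) -> Optional[str]:
    """Return the synced table whose location is the longest prefix of path."""
    best: Optional[str] = None
    best_len = -1
    for table, location in table_locations.items():
        prefix = location.rstrip("/") + "/"
        if path.startswith(prefix) and len(prefix) > best_len:
            best, best_len = table, len(prefix)
    return best


def is_allowed(role: str, operation: str, path: str,
               table_locations: dict[str, str], grants: Grants) -> bool:
    table = find_table(path, table_locations)
    if table is not None:
        # The path maps to a synced table: only table privileges are checked.
        return operation in grants.table.get((role, table), set())
    # No synced table matches the path: fall back to location privileges.
    return any(
        r == role and path.startswith(prefix) and operation in ops
        for (r, prefix), ops in grants.location.items()
    )


if __name__ == "__main__":
    locations = {"sales.orders": "s3://lake/sales/orders"}
    grants = Grants(
        table={("analyst", "sales.orders"): {"SELECT"}},
        location={("analyst", "s3://lake/"): {"SELECT", "INSERT"}},
    )
    # The table privilege allows SELECT on the synced table.
    print(is_allowed("analyst", "SELECT", "s3://lake/sales/orders/part-0.parquet", locations, grants))  # True
    # The location grant on s3://lake/ is ignored for paths inside the synced table.
    print(is_allowed("analyst", "INSERT", "s3://lake/sales/orders/part-1.parquet", locations, grants))  # False
```

In this model, the second call fails even though the role holds a location grant that covers the path. This mirrors the rule that location privileges are not checked for synced tables.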

Metadata synchronization is supported in the Hive, Delta Lake, and Iceberg connectors.

Configuration

To enable catalog metadata synchronization, set the `hive.metastore.sync.enabled`, `delta.metastore.sync.enabled`, or `iceberg.metastore.sync.enabled` property to `true` for the appropriate connector in your setup.
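The property prefix does not always match the connector name: the Delta Lake connector uses `delta.`, not `delta_lake.`. A small lookup makes that mapping explicit. This Python sketch assumes the connector is identified by the catalog's `connector.name` value (`hive`, `delta_lake`, or `iceberg`). The helper name is hypothetical.

```python
"""Sketch: build the metastore sync property line for a catalog.

Assumption: the connector is identified by the catalog's connector.name value.
The helper itself is hypothetical.
"""

# connector.name value -> metastore sync property for that connector
SYNC_PROPERTY = {
    "hive": "hive.metastore.sync.enabled",
    "delta_lake": "delta.metastore.sync.enabled",
    "iceberg": "iceberg.metastore.sync.enabled",
}


def sync_property_line(connector_name: str) -> str:
    """Return the properties-file line that turns on metastore sync."""
    prop = SYNC_PROPERTY.get(connector_name)
    if prop is None:
        raise ValueError(
            f"metastore sync is not supported for connector {connector_name!r}"
        )
    return f"{prop}=true"


if __name__ == "__main__":
    print(sync_property_line("delta_lake"))  # delta.metastore.sync.enabled=true
```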

Additionally, the following configuration properties are available:

Hive connector:

| Property | Description |
|---|---|
| `hive.metastore.sync.enabled` | Enable synchronization of the catalog metastore data to SEP’s embedded metastore. Defaults to … |
| | The name of the cluster in the centralized metastore for metadata originating from this catalog. Defaults to … |
| | The user who is impersonated in the Hive metastore connection for metastore synchronization. Defaults to an empty string. |
| | Specifies how often the metastore synchronization job runs. Accepts values from … |

Delta Lake connector:

| Property | Description |
|---|---|
| `delta.metastore.sync.enabled` | Enable synchronization of the catalog metastore data to SEP’s embedded metastore. Defaults to … |
| | The name of the cluster in the centralized metastore for metadata originating from this catalog. Defaults to … |
| | The user who is impersonated in the Delta Lake metastore connection for metastore synchronization. Defaults to an empty string. |
| | Specifies how often the metastore synchronization job runs. Accepts values from … |

Iceberg connector:

| Property | Description |
|---|---|
| `iceberg.metastore.sync.enabled` | Enable synchronization of the catalog metastore data to SEP’s embedded metastore. Defaults to … |
| | The name of the cluster in the centralized metastore for metadata originating from this catalog. Defaults to … |
| | The user who is impersonated in the Iceberg metastore connection for metastore synchronization. Defaults to an empty string. |
| | Specifies how often the metastore synchronization job runs. Accepts values from … |

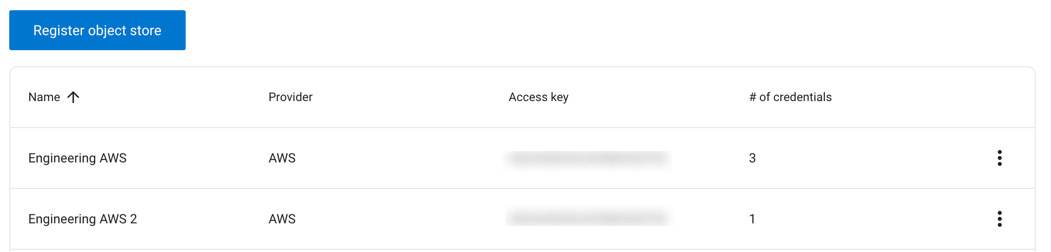

Registered object stores

A registered object store serves as the remote object storage destination for requests made with credentials linked to it. Requests that use linked credentials are always forwarded to that object store.

Additionally, if an object store is marked as default, it handles all requests not explicitly associated with another object store.
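The credential-based routing rule reduces to this: use the object store linked to the request's credentials, otherwise use the default store. The following Python sketch models only that rule and does not cover path-based routing. It assumes credentials are identified by their access key. The class names, the `Archive` store, and the access keys in the example are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectStore:
    name: str
    endpoint: str


class ObjectStoreRouter:
    """Hypothetical model of credential-based routing with a default store."""

    def __init__(self) -> None:
        self.stores: dict[str, ObjectStore] = {}
        self.links: dict[str, str] = {}  # credential access key -> object store name
        self.default: str | None = None

    def register(self, store: ObjectStore, default: bool = False) -> None:
        self.stores[store.name] = store
        if default:
            self.default = store.name

    def link(self, access_key: str, store_name: str) -> None:
        if store_name not in self.stores:
            raise KeyError(f"unknown object store {store_name!r}")
        self.links[access_key] = store_name

    def route(self, access_key: str) -> ObjectStore:
        # Linked credentials always use their object store; anything else
        # falls through to the default store, if one is set.
        name = self.links.get(access_key, self.default)
        if name is None:
            raise LookupError("no linked or default object store for this request")
        return self.stores[name]


if __name__ == "__main__":
    router = ObjectStoreRouter()
    router.register(ObjectStore("Engineering", "https://storage.domain.com"))
    router.register(ObjectStore("Archive", "https://archive.example.com"), default=True)
    router.link("AKIDEXAMPLE1", "Engineering")
    print(router.route("AKIDEXAMPLE1").endpoint)  # https://storage.domain.com
    print(router.route("UNLINKEDKEY").name)  # Archive
```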

By registering object stores, administrators can dynamically specify multiple physical storage locations to which SEP forwards object storage requests. To register an object store, you must provide credentials that authorize requests to it.

When object storage governance is enabled, the Object stores pane becomes available in the Starburst Enterprise web UI for roles with the Object stores UI privilege.

Object stores UI

To view object stores from the Starburst Enterprise web UI, select the Object stores pane in the Dell Data Processing Engine section of the navigation menu. The Object stores pane is available for roles with the Object stores UI privilege.

The following information about your registered object stores is displayed:

- Name: The name given to the object store.
- Provider: The provider for the object store, either AWS or the endpoint value provided at registration.
- Access key: The access key for the object store.
- # of credentials: The total number of credentials registered with the object store.

To make an object store the default, click its options menu and select Set as default.

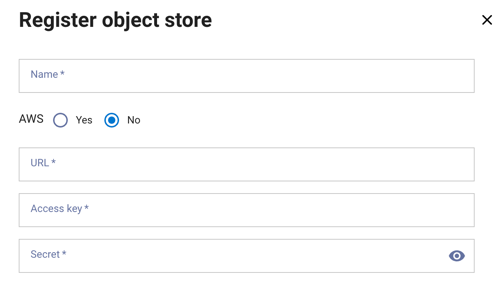

To register an object store:

Enter a name, URL, access key, and secret for the object store. If you are registering an AWS object storage location, choose the Yes radio button. Click Register to complete the registration.

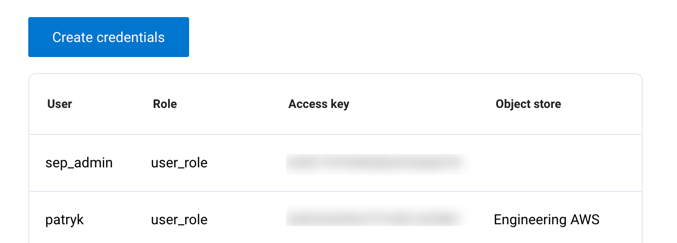

Credentials UI

To view object store credentials from the Starburst Enterprise web UI, select the Credentials pane in the Dell Data Processing Engine section of the navigation menu. The Credentials pane is available for roles with the Emulated credentials UI privilege.

Credentials are listed with the following information:

- User: The name of the user for whom the credentials are created.
- Role: The role that the user assumes when using the credentials.
- Access key: The access key for the object store.
- Object store: The registered object storage location.

Click Revoke to revoke credentials from the user.

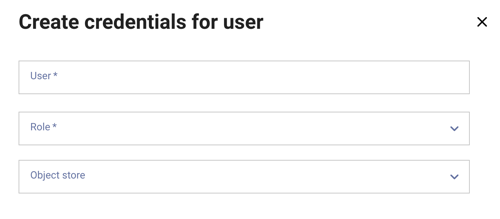

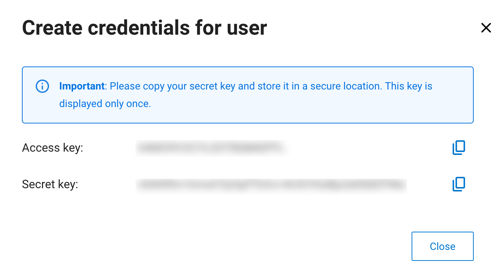

To create new credentials for an object store, click Create credentials:

Enter the user’s name and role, select the object store to create credentials for, and click Create.

On the next pane, copy the secret key value and store it for later use. This is the only time you can see this key. The access key and the secret key values are automatically generated.

Note

Credentials are the only supported authentication method for AWS.

Confirm that you saved the secret key, then click Close.

Dell Data Processing Engine tracks the number of credentials for each object store and displays it in the Object stores pane.
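A client presumably signs its object storage requests with the generated access key and secret key. The following Python sketch is an illustration only. It builds Spark settings that point the standard Hadoop S3A connector at an SEP object storage endpoint using those credentials. The endpoint URL is a placeholder and the helper name is hypothetical. Two points are assumptions to verify for your deployment: whether your jobs need these settings at all (Dell Data Processing Engine may configure them for you), and whether path-style access is required.

```python
"""Illustrative only: Spark settings that send S3A traffic to an SEP endpoint.

Assumptions: the endpoint URL is a placeholder for your SEP object storage
endpoint, and path-style access is assumed. The fs.s3a.* keys are standard
Hadoop S3A properties, passed to Hadoop through Spark's spark.hadoop.* prefix.
"""
from __future__ import annotations

from urllib.parse import urlparse


def s3a_settings(endpoint: str, access_key: str, secret_key: str) -> dict[str, str]:
    """Return Spark configuration entries for an S3A client using emulated credentials."""
    parsed = urlparse(endpoint)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"endpoint must be an http(s) URL, got {endpoint!r}")
    if not access_key or not secret_key:
        raise ValueError("both the access key and the secret key are required")
    return {
        "spark.hadoop.fs.s3a.endpoint": endpoint,
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
        # Assumption: the SEP endpoint expects path-style bucket addressing.
        "spark.hadoop.fs.s3a.path.style.access": "true",
    }


if __name__ == "__main__":
    conf = s3a_settings("https://sep.example.com", "EXAMPLEACCESSKEY", "example-secret")
    # Each entry could be passed to spark-submit as --conf key=value;
    # the secret is masked here so it is not echoed to the console.
    for key, value in conf.items():
        shown = "****" if key.endswith("secret.key") else value
        print(f"--conf {key}={shown}")
```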

Example workflow

The following details an example workflow for accessing object storage with Dell Data Processing Engine:

Register an object store with the following example attributes:

- Name: Engineering
- Provider: Custom
- URL: https://storage.domain.com
- Access key: ASXHXAPAH7YVXD16Z98V
- Secret: <hidden>

Create credentials for the registered object store with the following example attributes:

- User: test_user
- Role: demo_spark_user
- Object store: Engineering

Ensure the querying role has the necessary privilege grants to access the object store location.

Submit a job from the job submission pane with the following example attributes:

- Job name: test-job
- Job details: org.apache.spark.examples.SparkPi
- Resource pool: default
- Application: s3://dell-spark-demo/examples/jars/spark-examples_2.12-3.5.3.jar

View the logs from the job’s options menu.